Count number of pages scraped by bots over time

Alexandru Puiu · January 5, 2023 · 3 min

Tracking number of pages scraped by web crawlers

To count the number of web pages scraped, we can use a simple middleware with a predefined list of known bots. Every time a request arrives whose User-Agent header matches an entry in that list, the middleware increments a measurement through IMetricsService.

    using Microsoft.AspNetCore.Http;
    using System.Collections.Generic;
    using System.Linq;
    using System.Threading.Tasks;
    using Toggly.FeatureManagement;

    namespace Web.Helpers
    {
        public class BotTrackerMiddleware
        {
            private readonly RequestDelegate _next;
            private readonly IMetricsService _metricsService;

            // Lowercase substrings that identify known crawlers in a User-Agent header.
            private static readonly List<string> _crawlers = new List<string>()
            {
                "bot","crawler","spider","80legs","baidu","yahoo! slurp","ia_archiver","mediapartners-google",
                "lwp-trivial","nederland.zoek","ahoy","anthill","appie","arale","araneo","ariadne",
                "atn_worldwide","atomz","bjaaland","ukonline","calif","combine","cosmos","cusco",
                "cyberspyder","digger","grabber","downloadexpress","ecollector","ebiness","esculapio",
                "esther","felix ide","hamahakki","kit-fireball","fouineur","freecrawl","desertrealm",
                "gcreep","golem","griffon","gromit","gulliver","gulper","whowhere","havindex","hotwired",
                "htdig","ingrid","informant","inspectorwww","iron33","teoma","ask jeeves","jeeves",
                "image.kapsi.net","kdd-explorer","label-grabber","larbin","linkidator","linkwalker",
                "lockon","marvin","mattie","mediafox","merzscope","nec-meshexplorer","udmsearch","moget",
                "motor","muncher","muninn","muscatferret","mwdsearch","sharp-info-agent","webmechanic",
                "netscoop","newscan-online","objectssearch","orbsearch","packrat","pageboy","parasite",
                "patric","pegasus","phpdig","piltdownman","pimptrain","plumtreewebaccessor","getterrobo-plus",
                "raven","roadrunner","robbie","robocrawl","robofox","webbandit","scooter","search-au",
                "searchprocess","senrigan","shagseeker","site valet","skymob","slurp","snooper","speedy",
                "curl_image_client","suke","www.sygol.com","tach_bw","templeton","titin","topiclink",
                "urlck","valkyrie libwww-perl","verticrawl","victoria","webscout","voyager","crawlpaper",
                "webcatcher","t-h-u-n-d-e-r-s-t-o-n-e","webmoose","pagesinventory","webquest","webreaper",
                "webwalker","winona","occam","robi","fdse","jobo","rhcs","gazz","dwcp","yeti","fido","wlm",
                "wolp","wwwc","xget","legs","curl","webs","wget","sift","cmc"
            };

            public BotTrackerMiddleware(RequestDelegate next, IMetricsService metricsService)
            {
                _next = next;
                _metricsService = metricsService;
            }

            /// <summary>
            /// Increase measurement for BotScrape metric each time the user agent matches a bot
            /// </summary>
            /// <param name="context">The current HTTP context.</param>
            /// <returns>A task that completes once the rest of the pipeline has run.</returns>
            public async Task InvokeAsync(HttpContext context)
            {
                // The User-Agent header can be missing, so guard against null before lowercasing.
                string ua = context.Request.Headers.UserAgent.FirstOrDefault()?.ToLowerInvariant() ?? string.Empty;

                // Record one measurement when any known crawler token appears in the User-Agent.
                if (_crawlers.Exists(x => ua.Contains(x)))
                    await _metricsService.MeasureAsync("BotScrape", 1);

                // Always pass the request on to the next middleware.
                await _next(context);
            }
        }
    }
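The matching step can also be looked at in isolation. The following is a minimal sketch, not part of the Toggly API: `BotMatcher`, `IsBot`, and the shortened token list are hypothetical names introduced here for illustration. It shows the same substring check as the middleware, with a missing User-Agent treated as "not a bot".

```csharp
#nullable enable
using System;
using System.Linq;

// Hypothetical helper (not from the article or from Toggly) that isolates
// the User-Agent check so it can be exercised without an HTTP pipeline.
public static class BotMatcher
{
    // Illustrative subset of the crawler tokens used by the middleware.
    private static readonly string[] Crawlers =
    {
        "bot", "crawler", "spider", "slurp", "curl", "wget"
    };

    // Returns true when the User-Agent contains any known crawler token.
    // A null or empty User-Agent is treated as "not a bot".
    public static bool IsBot(string? userAgent)
    {
        if (string.IsNullOrEmpty(userAgent))
            return false;

        // Case-insensitive substring match, equivalent to lowercasing first.
        return Crawlers.Any(token =>
            userAgent.Contains(token, StringComparison.OrdinalIgnoreCase));
    }
}

public static class Program
{
    public static void Main()
    {
        // prints "True"
        Console.WriteLine(BotMatcher.IsBot(
            "Mozilla/5.0 (compatible; Googlebot/2.1; +http://www.google.com/bot.html)"));

        // prints "False"
        Console.WriteLine(BotMatcher.IsBot(
            "Mozilla/5.0 (Windows NT 10.0; Win64; x64) AppleWebKit/537.36"));

        // prints "False"
        Console.WriteLine(BotMatcher.IsBot(null));
    }
}
```

Because this is a plain substring match, very short tokens in the full list (for example "cmc" or "webs") could in principle match an ordinary browser's User-Agent, so the resulting count is best read as an estimate.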

Then, in Startup.cs, we can include our middleware conditionally, based on a feature flag, before we call app.UseEndpoints:

    app.UseMiddlewareForFeature<BotTrackerMiddleware>(FeatureFlags.BotTracker);
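To make the placement concrete, here is a rough sketch of where that call might sit in the request pipeline. Several things here are assumptions rather than details from the article: that `FeatureFlags` is a class of string constants, that the constant's value matches the feature key defined in Toggly, and that `UseMiddlewareForFeature` is the extension method from the Microsoft.FeatureManagement.AspNetCore package. Service registration (feature management, Toggly, controllers) is omitted.

```csharp
using Microsoft.AspNetCore.Builder;
using Microsoft.FeatureManagement;
using Web.Helpers;

// Assumed shape of the FeatureFlags type; the article references
// FeatureFlags.BotTracker but does not show its definition.
public static class FeatureFlags
{
    // Assumed to match the feature key defined in Toggly.
    public const string BotTracker = "BotTracker";
}

public class Startup
{
    public void Configure(IApplicationBuilder app)
    {
        app.UseRouting();

        // Runs BotTrackerMiddleware only while the feature flag is enabled.
        // Registered before UseEndpoints so it sees requests before they
        // reach an endpoint.
        app.UseMiddlewareForFeature<BotTrackerMiddleware>(FeatureFlags.BotTracker);

        app.UseEndpoints(endpoints =>
        {
            endpoints.MapControllers();
        });
    }
}
```

Gating the middleware behind a flag means tracking can be switched off from Toggly without redeploying the application.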

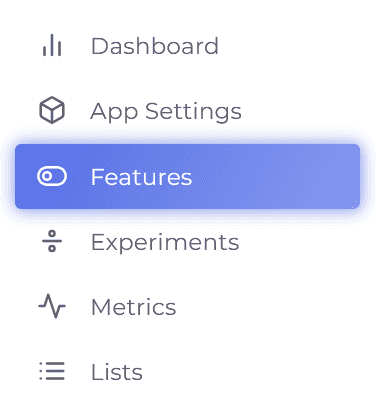

Next, in Toggly, we'll go to Features under our Application and add a definition for our BotScrape flag.

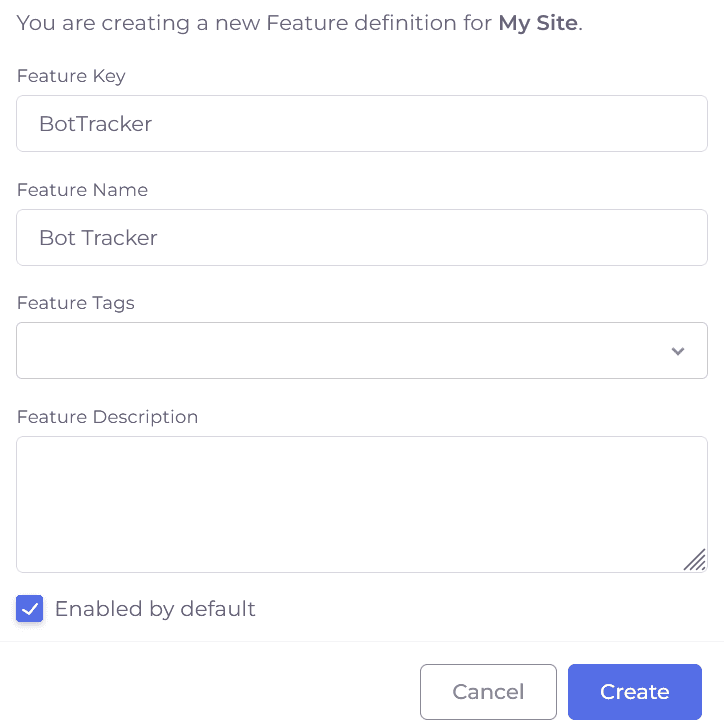

Finally, we'll define the BotScrape metric on our Metrics tab.
